Some of the most challenging and rewarding work we do involves crisis management teams under pressure. Whether the pressure is real or simulated, we get to watch leaders perform in a ‘pressure cooker’, often with interesting results. The ‘pressure cooker’ tends to amplify certain behaviours. We’ve been looking at how it amplifies bias, and we would like to share some examples as they occur during a typical crisis management meeting.

Crisis Management Process

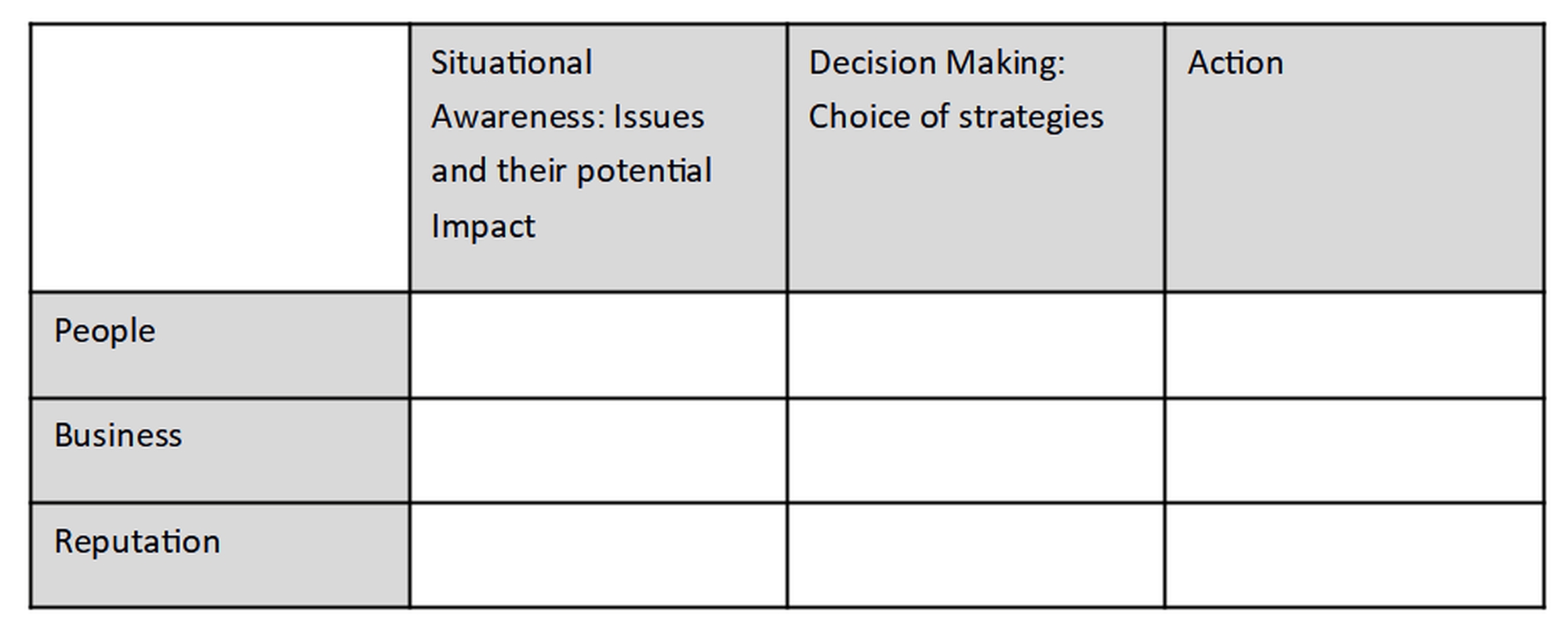

Here at Eddistone we have a simple process for crisis management that follows the UK Concept for Emergency Management: Situational Awareness, Decision Making and Action.

In this article we discuss some of the biases we notice in crisis teams as they work through this process. These biases fall into one of three categories described in Mueller and Dhar’s excellent book The Decision Maker’s Playbook (Pearson, 2019). In essence, first we simplify, then we try to make sense of what we are observing and lastly we stick to the view we have formed. These three overarching themes manifest themselves in specific biases we’ve observed as teams work through the stages of the crisis management process.

Biases impacting on Situational Awareness

As the crisis team sifts the key issues from incoming information, they often show confirmation bias, looking for evidence to support what they already believe. This is compounded by anchoring bias, where they give disproportionate weight to the first information they received. Sometimes we anticipate this and deliberately inject contradictory information, which they often fail to notice or give due attention. These biases can have a remarkably powerful effect on normally methodical, rational thinkers.

The next phase in a crisis meeting looks at the potential impact of each issue on the people, business and reputation of the organisation. Most people have heard of optimism bias. It is fair to say that executives would not be where they are today without an optimistic outlook, but a crisis is not business-as-usual. Optimism bias can be compounded by normalcy bias, our tendency to minimise the threat posed by a situation. Pessimism bias is no more helpful, however: what we need is a plausible range of outcomes.

More subtle, but equally dangerous, is the certainty effect. Some people are uncomfortable holding a range of plausible outcomes in mind. They tend to look for a trend, a baseline or a single prediction at the expense of the full picture. This reflects our instinct for pattern recognition and sense-making, which serves us well most of the time but can hijack us when we face complex cognitive problems.

Biases impacting on Decision Making

When the team looks at strategies to deal with the issues, we often see a reluctance to evaluate a range of options and a tendency towards functional fixedness: seeing only the familiar way of using a tool or approach. As Abraham Maslow put it, “if all you have is a hammer, everything looks like a nail”. And then there’s my personal favourite, the bikeshedding effect, otherwise known as Parkinson’s Law of Triviality, where a group spends a disproportionate amount of time on trivial issues. I challenge any committee member not to wince in recognition.

Biases impacting on Action

In the final phase of our crisis management process, generating and commissioning actions, we see two conflicting tendencies. On the one hand, team members tend to revert to their comfort zone, which might be an operational role: for example, a CEO who says ‘I designed that oil rig and I need to speak to the installation manager’. On the other hand, some tend to dissociate from implementation, getting stuck in conceptual thinking and reluctant to move on to a decision, let alone action. I haven’t found names for these yet, but perhaps there is a psychologist out there who knows!